As a BI Consultant, I've been hearing about the R programming language used in analytics but put off actually learning it. Then I saw a book on R and decided the time had come to finally delve in. Here are a couple of thoughts I would like to share.

Ah, It's a Scripting Language - Whew!

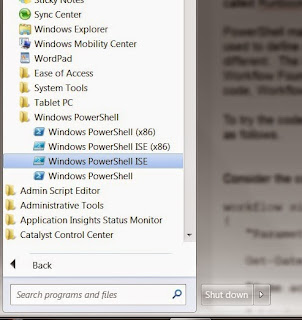

The first thing I discovered is that R is a scripting language like PowerShell, Bash, Perl, and Python, to name a few. This was a relief for me, as I have done a lot of work with PowerShell, so the semantics of R would be familiar. Actually, as I learned about R, I became convinced that Microsoft got some of its PowerShell ideas from R. For example, R has a command line interpreter (CLI) and a graphical environment for writing scripts, much like the PowerShell integrated scripting environment (ISE). In fact, I found RStudio, the popular development environment for R, to be very similar to the PowerShell ISE. As a scripting language, R is designed to be very interactive, so working one line at a time can be very effective for some tasks, whereas scripts are suited to repeatable, automated work.

You can get the base R language CLI for Windows at https://cran.r-project.org/bin/windows/base/.

RStudio for Windows can be downloaded from https://www.rstudio.com/products/rstudio/download/

All Variables are Arrays

The thing I find most interesting about R is that all variables are arrays. OK, to be more correct, I should say object collections. However, R distinguishes different types of collections. A one-dimensional array of a single data type is called a vector. Even if only one value is stored, it is a one-dimensional array. A two-dimensional array of the same data type is called a matrix, i.e. just a grid. What R calls an array is the general n-dimensional case, which in practice usually means three or more dimensions. It is important to bear in mind that these are all classes, not simple data types, and we can check the class name using the class function as shown below.

    > myvect = 1:10
    > class(myvect)
    [1] "integer"

The first line above creates a vector (single dimension array) named myvect and initializes it with element values 1 through 10. The second line asks for the variable's class. Note: Like PowerShell, R displays the value of any variable entered on a line by itself, as shown below.

    > myvect
    [1] 1 2 3 4 5 6 7 8 9 10
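To make the vector, matrix, and array distinction concrete, here is a minimal sketch. The variable names (single, m, a) are made up for illustration. It checks each structure with is.matrix, is.array, and dim rather than printing class output, since what class reports for a matrix differs between R versions.

```r
# A single value is still a vector of length 1
single <- 42
length(single)      # 1
is.vector(single)   # TRUE

# A matrix is a two-dimensional grid of one data type
m <- matrix(1:6, nrow = 2)
is.matrix(m)        # TRUE
dim(m)              # 2 3

# An array generalizes this to n dimensions (three here)
a <- array(1:24, dim = c(2, 3, 4))
is.array(a)         # TRUE
dim(a)              # 2 3 4
```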

For analysis, we need complex types and R has them. A list is basically a vector that can hold multiple data types. A data frame is a list of equal-length vectors, one per column, i.e. much like a record set or query result set. There are more powerful classes such as data.table available through R extensions called packages. More on that in another blog post.
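As a rough illustration of lists and data frames, the sketch below builds one of each. The names and values (person, df, "Ann", "Bob") are invented for the example, and stringsAsFactors = FALSE is set explicitly so the text column stays character regardless of R version.

```r
# A list can mix data types in one collection
person <- list(name = "Ann", age = 34L, active = TRUE)
sapply(person, class)   # one class per element

# A data frame holds one vector per column, all the same length
df <- data.frame(
  name = c("Ann", "Bob"),
  age  = c(34L, 41L),
  stringsAsFactors = FALSE
)

is.list(df)   # TRUE: a data frame is built on a list
nrow(df)      # 2 rows
ncol(df)      # 2 columns
df$age        # a column is an ordinary vector
```

Because each column is a vector, everything that works on vectors also works on a data frame column.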

Operations Work Automatically on the Elements of the Arrays

A fascinating feature, and probably one of the reasons R is such a powerful statistical analysis tool, is that operations you perform on array variables automatically get applied to the array elements. For example:

    > myvect * 2
    [1] 2 4 6 8 10 12 14 16 18 20
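The same element-by-element behavior applies to other operators and to many built-in functions. A short sketch, reusing the myvect vector from earlier (the other variable names are just for illustration):

```r
myvect <- 1:10

plus_one <- myvect + 1        # adds 1 to every element
squares  <- myvect * myvect   # multiplies matching elements pairwise
is_big   <- myvect > 5        # comparison yields a logical vector
roots    <- sqrt(myvect)      # functions like sqrt work per element too

sum(is_big)                   # 5 elements are greater than 5
```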

Above, by multiplying the vector variable by 2, every element is multiplied by 2. The same happens with other operations and we can use a function called lapply to have a custom function applied to the entire vector as shown below.

    > myfunct <- function (x) { x / 2 }
    > lapply(myvect, myfunct)
    [[1]]
    [1] 0.5

    [[2]]
    [1] 1

    [[3]]
    [1] 1.5

    [[4]]
    [1] 2

    [[5]]
    [1] 2.5

Above is a partial listing. Note: Creating a function looks like assigning the function code to a variable. Above, the function myfunct is called once for each element in the vector myvect, and lapply returns the results as a list. As you learn more about R, you realize that array processing, or again more correctly, collection processing, is at the heart of the language.
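Since lapply always returns a list, it can help to compare it with two alternatives: sapply, which tries to simplify the result to a vector, and plain vectorized arithmetic. This is only a sketch using the same myvect and myfunct as above; the result variable names are made up.

```r
myvect  <- 1:10
myfunct <- function(x) { x / 2 }

as_list   <- lapply(myvect, myfunct)   # list with one element per input
as_vector <- sapply(myvect, myfunct)   # simplified to a numeric vector
direct    <- myvect / 2                # no function call needed here

is.list(as_list)            # TRUE
all(as_vector == direct)    # TRUE: same values either way
```

For a simple calculation like this one, the direct vectorized form is usually the most readable; lapply and sapply earn their keep when the function does something the built-in operators cannot.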

Summary

This was just to give you a flavor of R with a couple of key takeaways. One, if you are familiar with a scripting language, you have a jump on R programming. Two, R is designed to work with object collections. Cubes conceptually work with data in N-dimensional arrays. I think that conceptual approach serves well for R too.